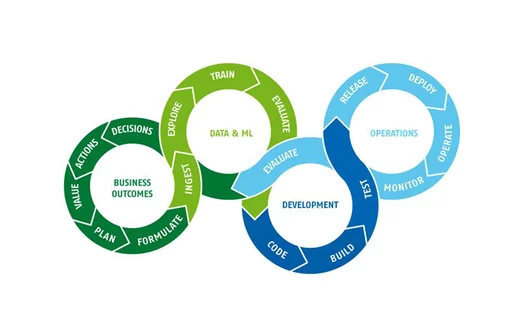

More and more organizations are extracting value from their business data, and they want to apply and test that added value as quickly as possible. MLOps is the most obvious way to do this, but where do you start?

MLOps in practice: how do you quickly extract value from data?

Organizations can adopt MLOps as a methodology to extract value from their data quickly. In our earlier article, MLOps: for a perfect Machine Learning Pipeline, we briefly discussed the components of this methodology. In this article, we take a closer look at these components and at who should play which role, and when.

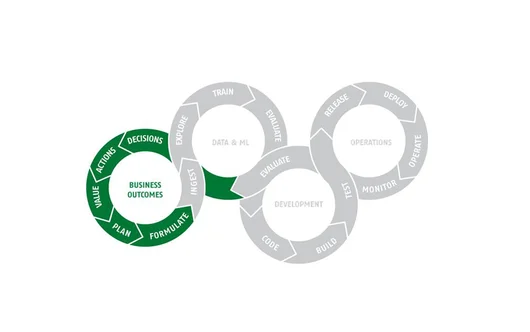

Business Outcomes

The MLOps Pipeline begins with a well-formulated use case, in which the desired Business Outcomes are clearly articulated. The department head or business manager takes the lead* in identifying the need for the use case. He or she is the owner of the use case and decides when to start the process.

Identifying the intended value takes deliberate effort. An organization might start with a brainstorming session initiated by the manager, and preliminary research may be needed to establish the intended value. That research shows what added value the organization envisions, how it will benefit the organization and to what extent. Several roles contribute to this process.

The business analyst plays a critical role in understanding the department's current operations and identifying areas for improvement. He or she gathers requirements, analyzes business facts and facilitates the translation of business needs into measurable specifications. This creates a plan of action from the business perspective that serves as input for the final formulation of the use case. It also gradually clarifies which data sources are initially important.

For more complex issues, you need experts on specific components in addition to the business analyst. These experts have in-depth knowledge of day-to-day operations, production processes and market movements, among other things. They offer insights and contribute to the formulation of the use case by providing perspectives, identifying areas of concern and focusing research points. This may even eventually lead to breaking down the use case into manageable pieces for the data science team.

As soon as the formulation becomes concrete, it is advisable to involve data engineers and data scientists. They help identify relevant data sources and can usually provide more insight into the accessibility and quality of those sources. The result is a widely supported use case, a sharply formulated goal and an understanding of the source data needed.

This completes the formulation, you would think. But no, one aspect is still missing.

The Data Privacy Impact Analysis is part of the formulation of the use case. A use case that violates the rules on security and privacy (or the legislation surrounding AI applications) is doomed, however promising it looks. So, last but not least, the data privacy officer gives a final judgment, after which the organization can start working with the data, possibly with some adjustments.

The business team also stays involved, at a distance, in the subsequent phases of the MLOps cycle. As soon as the first tangible results are available, the team assesses whether they meet the initial expectations. MLOps is an Agile way of working: it proceeds in small steps that may require adjustment, and new decisions are made once the first monitoring results are in. New actions are then launched to refine or extend the use case, after which the Business Outcomes cycle is run again and the next cycle starts.

* Depending on the importance of the use case, an executive sponsor may be involved. This person, for example, provides executive-level support, approves budgets and ensures alignment with organizational goals in general.

Data & Machine Learning

The next stage in the MLOps Pipeline is working with data and developing ML models. It begins with data engineers and data scientists locating the data sources identified during the formulation of the use case. Data engineers extract, load and transform (ELT) the data, safeguard its quality and integrity, and make it available for further analysis and model development.
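A minimal sketch of the kind of quality gate data engineers might run before releasing extracted records for analysis. The schema, the column names and the non-negative amount rule are hypothetical examples, not part of any specific pipeline; in practice teams often use a dedicated data validation tool for this.

```python
from dataclasses import dataclass, field

# Hypothetical schema: column name -> (expected type, missing value allowed?)
SCHEMA = {
    "customer_id": (str, False),
    "order_amount": (float, False),
    "region": (str, True),
}


@dataclass
class QualityReport:
    valid_rows: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (row index, reason) pairs


def check_quality(rows, schema=SCHEMA):
    """Split extracted rows into valid rows and rejected rows with a reason."""
    report = QualityReport()
    for index, row in enumerate(rows):
        reason = None
        # Completeness and type checks against the schema.
        for column, (expected_type, nullable) in schema.items():
            value = row.get(column)
            if value is None:
                if not nullable:
                    reason = f"missing {column}"
                    break
            elif not isinstance(value, expected_type):
                reason = f"{column} has type {type(value).__name__}"
                break
        # Example business rule (hypothetical): amounts cannot be negative.
        if reason is None and row.get("order_amount", 0.0) < 0:
            reason = "negative order_amount"
        if reason is None:
            report.valid_rows.append(row)
        else:
            report.rejected.append((index, reason))
    return report
```

Keeping the rejected rows together with a reason, rather than silently dropping them, gives the source owners something concrete to fix.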

Data scientists then examine the data, develop candidate models and test them to determine which model best fits the use case. The candidates can range from simple linear regression models to complex deep learning networks.
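To make "testing candidate models" concrete, the sketch below fits two candidates, a mean baseline and a simple linear regression, on training data and keeps the one with the lowest mean squared error on a held-out validation set. It is plain Python for illustration only; the data and the candidate list are hypothetical, and real projects would typically use a library such as scikit-learn together with cross-validation.

```python
def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean


def fit_linear(xs, ys):
    """Simple linear regression fitted with ordinary least squares."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def mse(model, xs, ys):
    """Mean squared error of a fitted model on a data set."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


CANDIDATES = {"mean baseline": fit_mean, "linear regression": fit_linear}


def select_model(train, valid, candidates=CANDIDATES):
    """Fit every candidate on train and return the best name plus all scores."""
    scores = {name: mse(fit(*train), *valid) for name, fit in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

Scoring on data the model has not seen is what keeps this comparison honest; a more complex candidate only wins if it actually generalizes better.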

During this process, it is essential to maintain constant communication with the business team to ensure that the models are aligned with business goals and to make adjustments as needed. This includes regular iterations and experiments to improve and optimize model performance.

At each step in this process, the data and models must be "versioned", tested and monitored to ensure reproducibility and to identify and fix any problems. This is where tools and techniques such as automated test frameworks, data versioning systems and ML tracking tools are critical.
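One simple way to make runs reproducible is to fingerprint the exact training data and store that fingerprint alongside the model version, parameters and metrics. The sketch below does this with a SHA-256 hash of a canonical JSON encoding and appends each run to a JSON Lines file; the record fields and the file layout are assumptions for illustration. Dedicated data versioning and ML tracking tools do the same job at scale.

```python
import hashlib
import json


def fingerprint(rows):
    """Return a SHA-256 hash that changes whenever the data changes.

    Keys are sorted so that the same records always produce the same hash,
    regardless of dictionary key order. Reordering the rows does change it.
    """
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_run_record(rows, model_version, params, metrics):
    """Bundle everything needed to trace a model back to its data."""
    return {
        "model_version": model_version,
        "data_sha256": fingerprint(rows),
        "params": params,
        "metrics": metrics,
    }


def log_run(path, record):
    """Append one run record to a JSON Lines file (one JSON object per line)."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")
```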

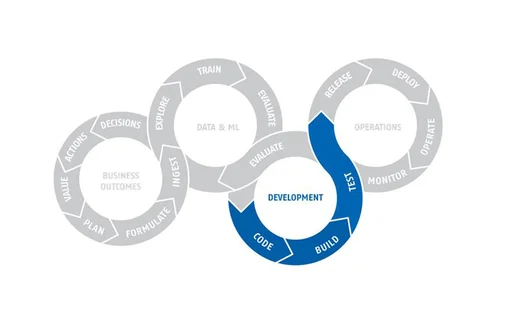

Development

Now that we have completed the important steps of data preparation and Machine Learning, we move to the next step in the MLOps Pipeline: the development phase. This phase involves implementing the Machine Learning model that was designed and tested during the Data & Machine Learning phase.

Implementation begins with integrating the model into the chosen software application. This could be, for example, an existing business application extended to incorporate the predictive capabilities of the model, or an entirely new product built around the model. This process, often referred to as coding, requires close collaboration between data scientists, data engineers and software developers.

There are numerous ways to implement models, but some common techniques include:

- REST APIs: This is a widely used method, where the model is hosted as a service and provides predictions on demand.

- Batch processing: This is useful when large numbers of predictions are needed at the same time, for example for reporting or analysis.

- Real-time streaming: This is useful for applications where predictions are needed in real time, for example for analyzing live sensor data, fraud detection or personalized recommendations.
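Batch processing and real-time streaming can share the same prediction function; only the way records are fed in differs. In this sketch, `predict` is a hypothetical rule-based stand-in for a trained fraud model, and the field names are invented for illustration. A REST API would wrap the same function in a web service, which is left out here to keep the example self-contained.

```python
from typing import Iterable, Iterator


def predict(transaction: dict) -> float:
    """Hypothetical stand-in for a trained model: returns a fraud score in [0, 1]."""
    score = 0.0
    if transaction["amount"] > 1000:
        score += 0.5
    if transaction["country"] != transaction["card_country"]:
        score += 0.25
    return min(score, 1.0)


def batch_score(transactions: list) -> list:
    """Batch processing: score a complete set of records in one run, e.g. nightly."""
    return [predict(t) for t in transactions]


def stream_score(events: Iterable[dict], threshold: float = 0.5) -> Iterator[dict]:
    """Real-time streaming: score each event as it arrives and emit alerts immediately."""
    for event in events:
        score = predict(event)
        if score >= threshold:
            yield {"id": event["id"], "score": score}
```

Because `stream_score` is a generator, it processes one event at a time and never needs the full data set in memory, which is what distinguishes it from the batch variant.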

After the coding phase, the written code and integrated models are thoroughly tested. This includes both testing the functionality of the software and checking the accuracy and reliability of the model predictions.
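Checking the accuracy of model predictions can be automated as a test that runs alongside the functional tests. This sketch computes accuracy on a labelled hold-out set and compares it with a minimum threshold; the model, the examples and the 0.9 threshold are hypothetical and would in practice be agreed with the business.

```python
def is_large_order(features):
    """Hypothetical model under test: flags orders above 100."""
    return features["amount"] > 100


def accuracy(model, examples):
    """Fraction of (features, label) pairs the model predicts correctly."""
    if not examples:
        raise ValueError("need at least one labelled example")
    correct = sum(1 for features, label in examples if model(features) == label)
    return correct / len(examples)


def passes_acceptance(model, examples, min_accuracy=0.9):
    """Release gate: True only if accuracy meets the agreed minimum."""
    return accuracy(model, examples) >= min_accuracy
```

In a CI pipeline, this check would typically be wrapped in a test function so that a failing model blocks the build, just like a failing unit test.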

Once the code and models are tested and approved, they are built into an executable software product ready for implementation in the production environment. The goal is to create a robust and reliable application that leverages the predictive power of the Machine Learning model to deliver value to the organization.

This development phase paves the way for the next phase in the MLOps Pipeline: deployment to the production environment and keeping it operational. Keep in mind that Machine Learning modeling is an iterative process, so models are likely to change and improve over time. Before the solution is handed over to operations, it must therefore be possible to roll out new versions of a model smoothly, without disrupting existing business processes.
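One way to swap model versions without touching the calling business process is to route every prediction through a single entry point that refers to the currently active version, with a quick way back. The in-process `ModelRegistry` below is a hypothetical illustration of that idea, not the API of any particular product; production setups usually rely on a dedicated model registry and deployment tooling.

```python
import threading


class ModelRegistry:
    """Callers always use predict(), so the active version can change underneath them."""

    def __init__(self):
        self._models = {}
        self._active = None
        self._previous = None
        self._lock = threading.Lock()  # keeps swaps and lookups consistent across threads

    def register(self, version, model):
        with self._lock:
            self._models[version] = model

    def promote(self, version):
        """Make a registered version active and remember the old one for rollback."""
        with self._lock:
            if version not in self._models:
                raise KeyError(f"unknown model version: {version}")
            self._previous, self._active = self._active, version

    def rollback(self):
        """Switch back to the previously active version."""
        with self._lock:
            if self._previous is None:
                raise RuntimeError("no previous version to roll back to")
            self._active, self._previous = self._previous, self._active

    @property
    def active_version(self):
        return self._active

    def predict(self, features):
        with self._lock:
            if self._active is None:
                raise RuntimeError("no model version has been promoted yet")
            model = self._models[self._active]
        return model(features)
```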

Operations

The final phase of the MLOps Pipeline is operations. In this phase, implemented models are put into production and monitored to ensure that they perform as expected and continue to deliver value to the organization. This means that we not only want to know if a model is working correctly from a technical standpoint (e.g. whether it handles requests correctly), but also whether it is still making the right predictions based on the latest data.

Monitoring is an essential part of this phase. We want to know if there are anomalies in the performance of our models, if the data we receive is still in line with the data we used to train the model, and if the model is still providing meaningful predictions or insights. Furthermore, it is critical to determine what the model actually delivers within the business process; what effects does it have?
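Whether incoming data is still in line with the training data can be quantified with a drift statistic. The sketch below computes the Population Stability Index (PSI), one common choice among several, for a single numeric feature. The bin count and the small floor for empty bins are assumptions; a frequently quoted rule of thumb treats values above roughly 0.25 as significant drift, but thresholds should be set per use case.

```python
import math


def psi(expected, actual, bins=10, floor=1e-4):
    """Population Stability Index between training values and live values.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the fractions of expected (training) and actual (live) values in bin i.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant features

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Bin edges come from the training data; live values outside that
            # range are clamped into the first or last bin.
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        # Floor empty bins so the logarithm stays defined.
        return [max(c / len(values), floor) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```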

The organization must be willing to adjust and adapt. A model that performed fantastically six months ago may not work as well now due to changes in the data or in the underlying patterns of the business. This is where the concepts of Model Drift and Continuous Learning come into play: we must be prepared to retrain, adjust or replace our models as needed. That requires new actions, which are decided on in consultation with the business. In this way, MLOps becomes a closed loop that enables the organization to keep responding to changing circumstances and new insights.
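Model drift often shows up as a drop in accuracy once actual outcomes become known. The sketch below keeps a rolling window of recent outcomes and flags the model for review when accuracy falls below a minimum. The window size and threshold are hypothetical, and in line with the text above, a flag is a prompt for a decision with the business rather than an automatic retrain.

```python
from collections import deque


class DriftMonitor:
    def __init__(self, window=100, min_accuracy=0.8):
        # deque with maxlen drops the oldest outcome once the window is full
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        """Store whether a prediction turned out to be correct."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        # Only flag once the window is full, to avoid reacting to a few early outcomes.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.min_accuracy
```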

Finally, as with any operational environment, we must also plan for exceptions and failures. This means thinking about things like error handling, backup and recovery, and robust security measures to ensure that our models and data remain protected from both inadvertent errors and malicious attacks.
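For error handling, a common pattern is to retry a failed prediction a few times and then fall back to a safe default so that the business process can continue. In this sketch the model and the fallback are plain callables supplied by the caller, and the retry count and delay are assumptions. Catching every `Exception` keeps the example short; a real service would catch specific errors and raise an alert on repeated fallbacks.

```python
import time


def predict_with_fallback(model, features, fallback, retries=2, delay=0.5, log=print):
    """Return (prediction, source), where source is "model" or "fallback"."""
    for attempt in range(retries + 1):
        try:
            return model(features), "model"
        except Exception as exc:  # broad on purpose for the sketch; narrow this in practice
            log(f"prediction attempt {attempt + 1} failed: {exc!r}")
            if attempt < retries:
                time.sleep(delay)  # brief pause before retrying
    # All attempts failed: use the fallback (e.g. a rule-based default).
    return fallback(features), "fallback"
```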

In short, the operational phase of MLOps is about ensuring the reliability, performance and security of our Machine Learning systems in production. And as with the previous phases, close collaboration between different roles (data scientists, data engineers, IT operations and business stakeholders) is essential to ensure that we get the most out of our Machine Learning initiatives.

Read more about MLOps

Are you interested in MLOps? Then also read our first article, MLOps: for a perfect Machine Learning Pipeline, in which we explain what MLOps is all about. In our third article, we take a closer look at the success factors and pitfalls of this methodology. That article will soon appear on Centric Insights.